(Post 1 of a series on quick research and usability techniques. Start-ups can use these techniques fairly easily to connect with and understand their end users better, and to maintain usability standards in their products.)

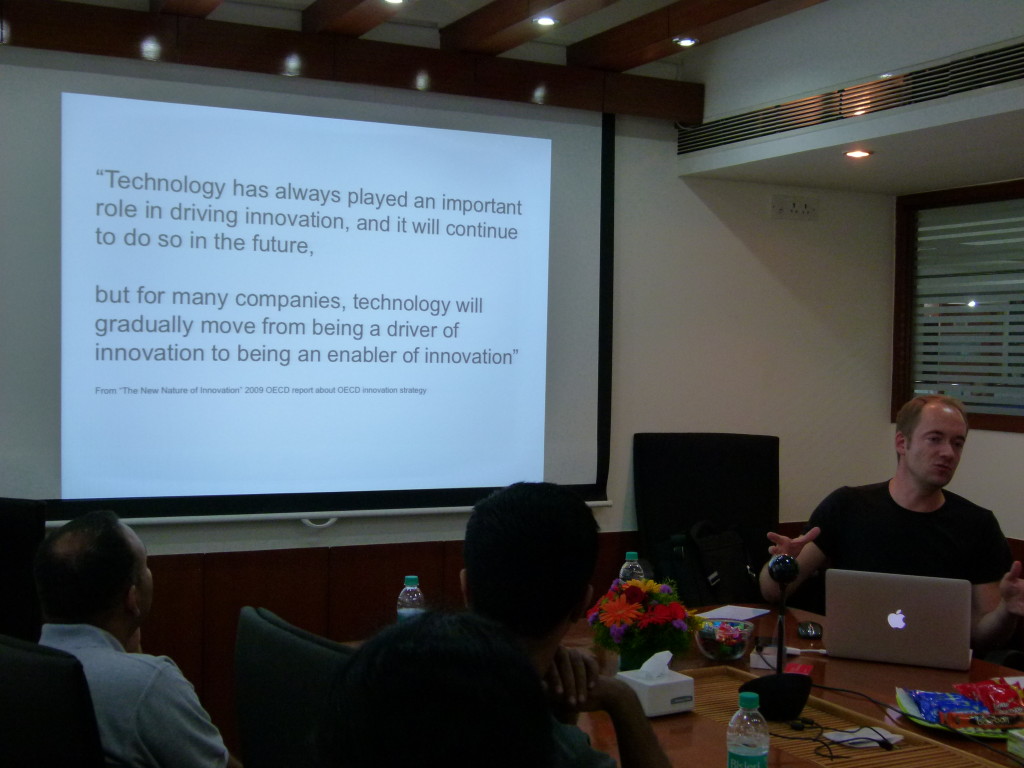

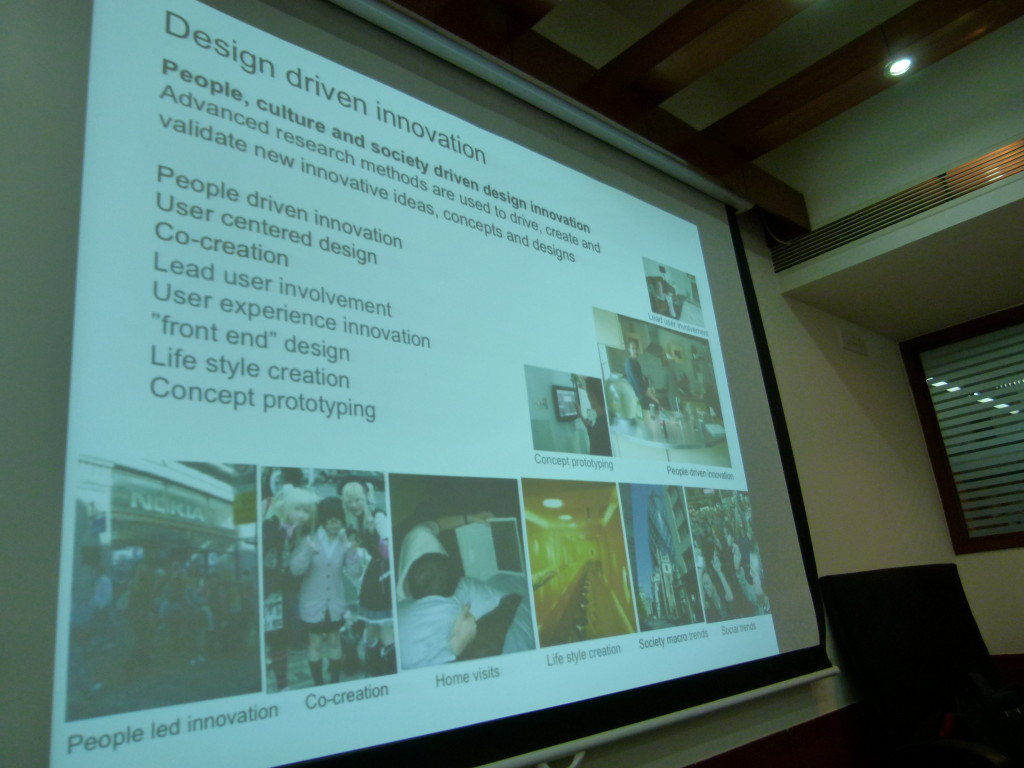

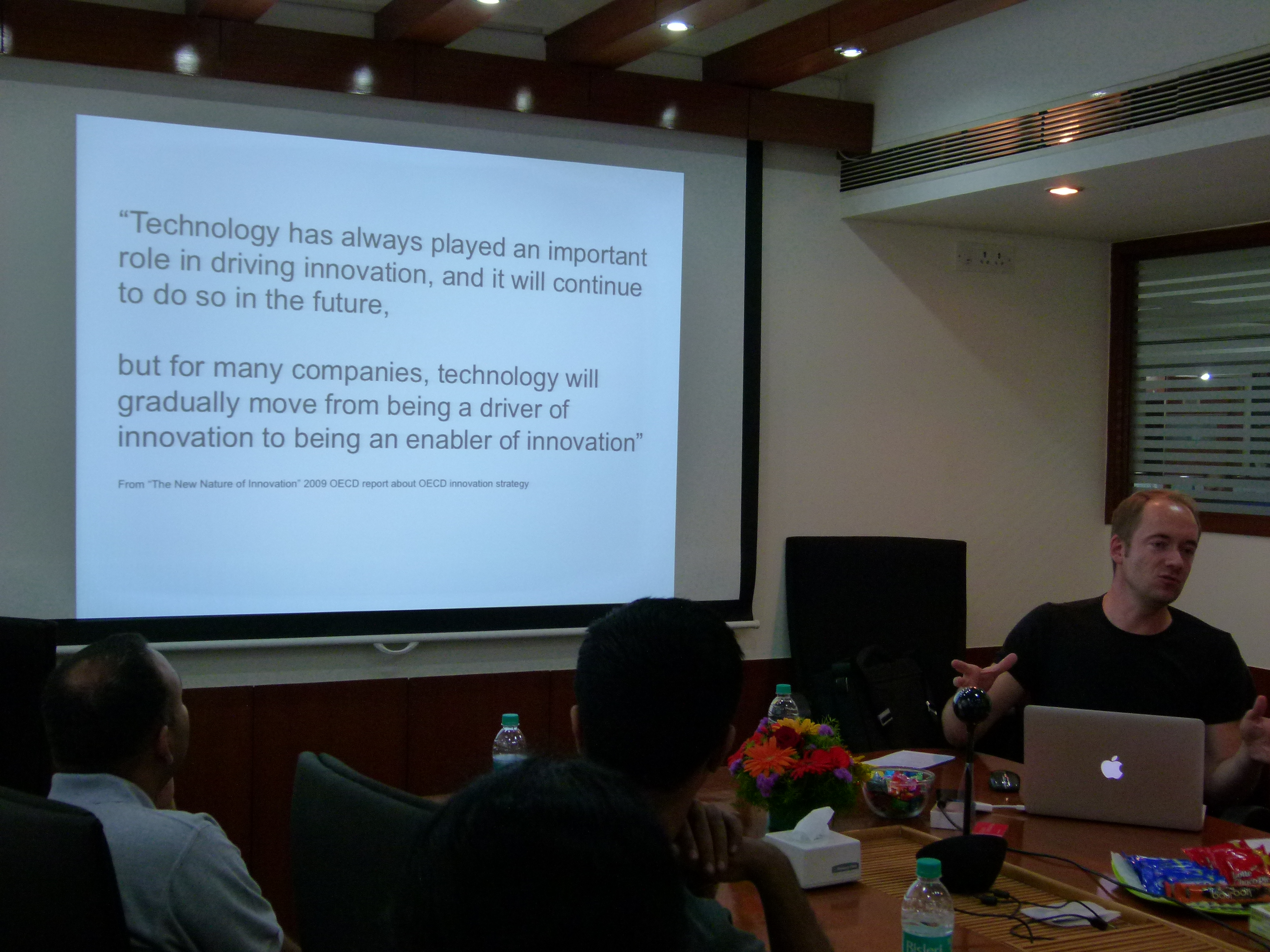

ProductNation, in collaboration with a few like-minded design professionals, recently put together an informal forum for designers, engineers, product managers and entrepreneurs in the Delhi NCR region. The objective of this forum was to evangelize and encourage a dialogue around Design Thinking in the start-up community.

I conducted a short workshop on this topic at the forum’s launch event, a day-long interactive meetup hosted at the MakeMyTrip office in Gurgaon.

During the workshop, I introduced participants to the concept of Design Thinking and touched upon a few design research and usability methods that they could use to support design thinking within their organizations. A brief recap:

Design Thinking is an approach to design rather than a specific technique or method.

Iteration is a core principle of design thinking. A ‘prototype and test’ approach, fuelled by empathy for the people who will ultimately use the product, is recommended throughout the product development lifecycle.

There are several user research methods that can help companies connect with and understand their end users better. Guerrilla Research techniques are especially useful in the start-up environment, where time is of the essence, budgets are limited, teams are small, and people typically play multiple roles.

Guerrilla Research includes research techniques that can be done more quickly, and with less effort and budget, than formal or traditional user research techniques. Remote / Informal Usability Testing, Man on the Street Interviews, Micro-surveys, Fake Doors, ‘Design the Box’ and Personal Inventory are a few examples of quick research techniques that can be learnt and implemented fairly well by a novice researcher, or by anyone on a start-up team doubling up as a researcher.

In this first post, I want to introduce a discount usability engineering method called the ‘Expert Usability Review.’

Like Guerrilla Research methods, a Usability Review is an effective way to quickly identify usability and ease-of-use issues on a product. However, unlike user research, this method does not involve talking to end users at all.

It involves ‘expert evaluators’ reviewing a product to identify usability and ease-of-use issues across different UI areas such as Navigation and Structure, Layout, Visual Design, Interaction, Error Handling and Content. The experts identify issues by drawing on their own experience in design and usability.

Subjectivity is minimized, and the validity of issues maximized (or at least that is the aim!), by ensuring that each issue identified maps onto existing, recognized design guidelines, principles, best practices or heuristics.

The issues identified through a review can then be fixed as part of an iterative design process. A Usability Review typically identifies the ‘low hanging fruit’, i.e. the obvious usability problems.

Doing a review helps to highlight any aspect of an interface that violates usability and design principles.

The issues that surface through a review are different from the type of issues that come up with user-based methods like Usability Testing, so a review is a good complement to other user research techniques that may also be employed.

(More on typical issues found through Heuristic Evaluation and Usability Testing vs. Expert Reviews)

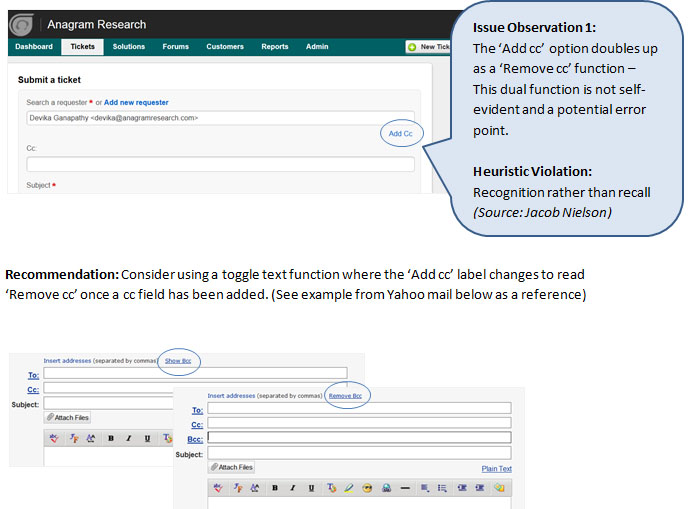

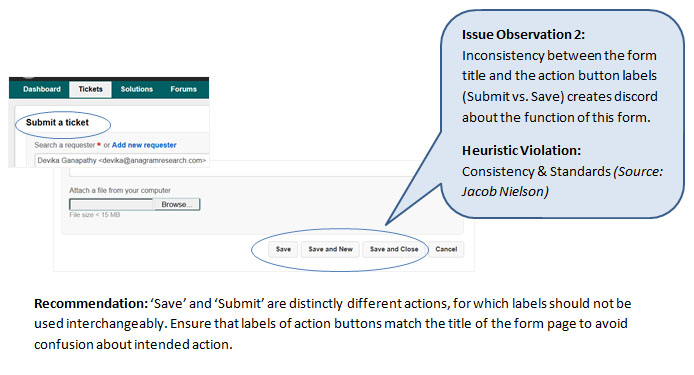

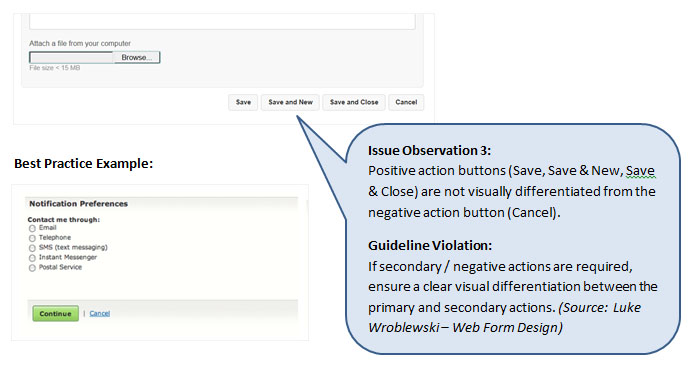

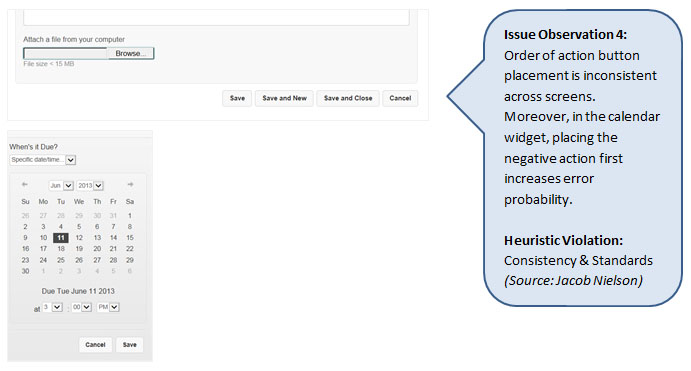

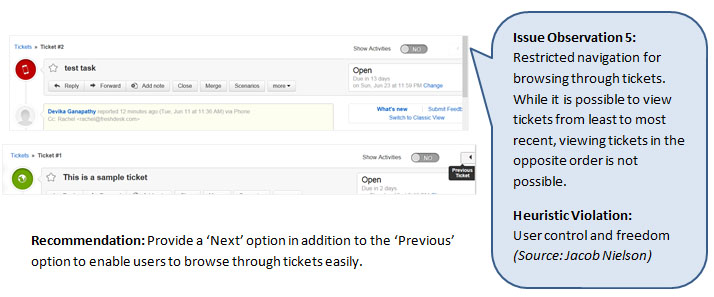

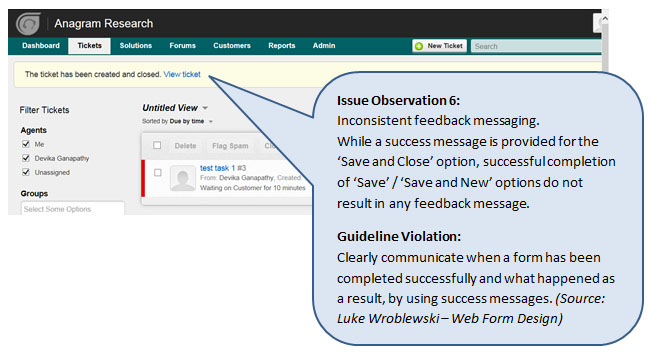

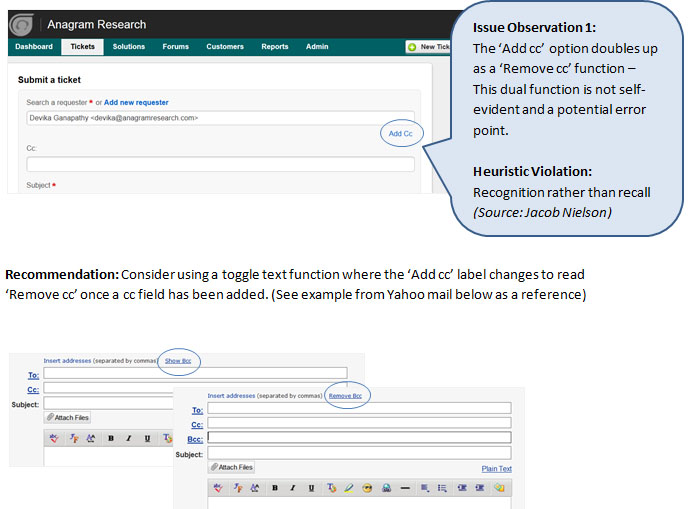

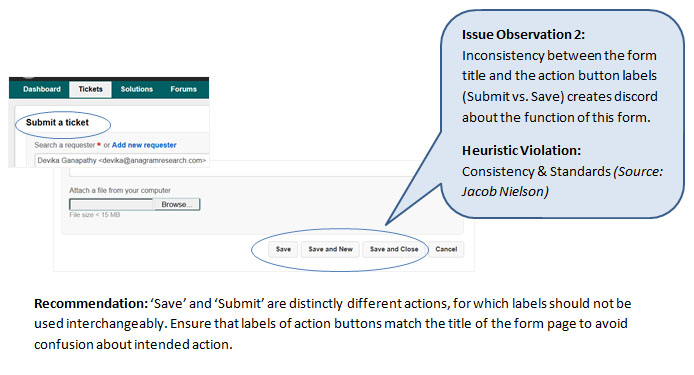

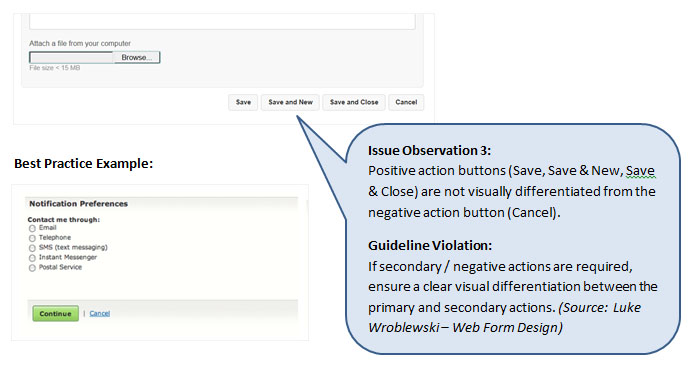

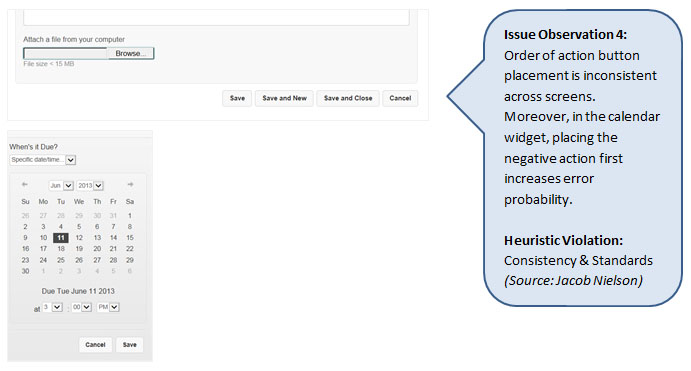

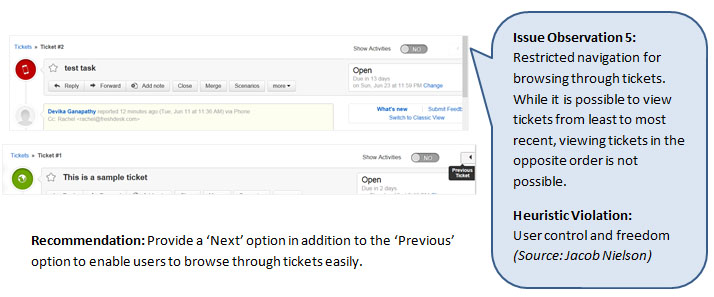

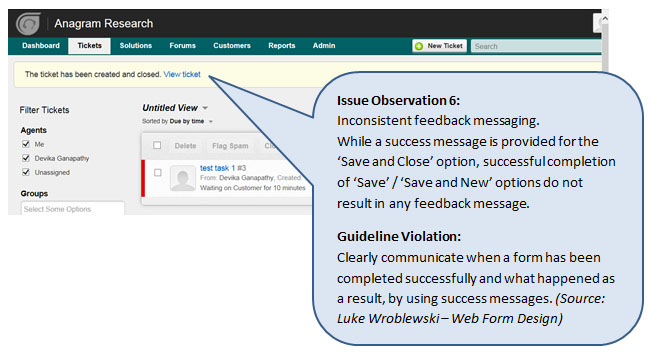

To demonstrate the type of issues typically found through a Usability Review, I evaluated the ‘Submit Ticket’ function on Freshdesk. Freshdesk is online customer support software targeted at small and medium-sized businesses looking for a cloud-based solution.

Here are some of the issues that I found:

Note: This is not an exhaustive review of the ‘Submit Ticket’ page, but a few example issues that help illustrate the type of issues that may be found through a usability review.

The products selected as examples in this series of posts are well designed in general. This highlights the importance of iterative design: even well-designed products have issues that can be unearthed using various usability and research techniques.

The examples shown above are just a fraction of the issues that a Usability Review could highlight.

The success and effectiveness of this technique depend on the experience and skill of the reviewers. A review is typically done by three or four experts in the field of usability and design.

This method is best suited for start-ups that have access to skilled and experienced usability / design professionals who can conduct a Usability Review.

Post 2, coming up soon, will introduce a related technique called ‘Heuristic Evaluation’.

With goals similar to those of an Expert Usability Review, a Heuristic Evaluation is a relatively easier starting point for novice researchers. It is ideal for start-ups that don’t have a formal design / usability team in place but want to try their hand at usability evaluation.

Are you a design thinker evangelizing or facilitating user research and usability methods within your start-up?

We would love to hear about your experience and answer any questions you have about the methods you used.

We also invite members of the start-up community to volunteer their screens / functions for use as examples in upcoming posts showcasing additional research techniques. Email me at devika(at)anagramresearch.com to check whether your screen is eligible for selection.
