The passage of the Digital Personal Data Protection Bill 2023 (DPDP) by the Lok Sabha is significant in more ways than one. The Bill aims to enforce and promote lawful usage of digital personal data and stipulates how organisations and individuals should navigate privacy rights and handle personal data.

Creating effective mechanisms to enable data governance has become one of the top priorities for countries around the world. The challenge for policymakers is designing legal and regulatory frameworks that clearly lay down the rights of data principals and obligations for data fiduciaries.

The DPDP Bill is a much-needed step in this direction, taken after months of deliberations and discussions. Such normative frameworks are critical to securing regulatory certainty for enterprises. However, innovative technical measures are required to support their operationalisation.

In the past couple of years, India has made significant strides in adopting a techno-legal approach to data governance. Through this approach, India is building technical infrastructure for authorising access to datasets, with privacy and security principles embedded in its design.

Data also lies at the heart of AI innovations that can address significant global challenges. India’s unique techno-legal approach to data governance is applicable across the life cycle of machine learning systems. It complements the country’s ambition of supporting its growing AI start-up ecosystem while providing privacy guarantees.

The launch of the Data Empowerment and Protection Architecture (DEPA) in 2017, as part of India Stack, was India’s paradigm-defining moment for the inference cycle of the machine learning life cycle. It proposed the setting up of Consent Managers (CMs), also known as Account Aggregators in the financial sector.

This approach, also mentioned in the current iteration of the DPDP (Chapter 2, Sections 7-9), ensures individuals can exercise control over their data and can provide revocable, granular, auditable, and secure consent for every piece of data using standard Application Programming Interfaces (APIs). The secured consent artefact records an individual’s consent for the stated purpose.

It allows users to transfer their data from the businesses that hold it to those that need it to provide them with certain services, while ensuring purpose limitation. For instance, individuals can share their financial data residing within their banks with potential loan service providers to get the best loan package.
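The properties described above (revocable, granular, auditable consent tied to a stated purpose) can be illustrated with a minimal sketch. The class and field names below are hypothetical and chosen only for illustration; they are not taken from the DEPA or Account Aggregator specifications.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentArtefact:
    """Hypothetical record of one individual's consent for one stated purpose."""
    principal: str            # the individual whose data it is
    provider: str             # who holds the data, e.g. a bank
    recipient: str            # who may receive it, e.g. a lender
    data_fields: frozenset    # granular: only these fields are covered
    purpose: str              # purpose limitation: only this use is covered
    revoked: bool = False
    audit_log: list = field(default_factory=list)

    def _log(self, event: str) -> None:
        # Auditable: every decision is recorded with a UTC timestamp.
        self.audit_log.append((datetime.now(timezone.utc), event))

    def revoke(self) -> None:
        """The individual withdraws consent; later requests are refused."""
        self.revoked = True
        self._log("revoked")

    def permits(self, recipient: str, data_field: str, purpose: str) -> bool:
        """Check a single data request against the consent and log the outcome."""
        allowed = (
            not self.revoked
            and recipient == self.recipient
            and data_field in self.data_fields
            and purpose == self.purpose
        )
        self._log(f"{recipient} requested {data_field} for {purpose}: "
                  f"{'allowed' if allowed else 'refused'}")
        return allowed
```

In this sketch, a lender asking for bank statements for loan underwriting would be allowed, while the same lender asking for the same data for marketing would be refused, and every request would be refused once the individual calls `revoke()`.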

DEPA is India’s attempt at securing a consent-based data-sharing framework. It has facilitated the financial inclusion of millions of its citizens. Eight of India’s largest banks were early adopters of the framework starting in 2021. Currently, 415 entities, including CMs, Financial Information Providers, and Users, participate across various DEPA implementation stages.

However, the training cycle of an AI model demands substantially more data to make accurate predictions in the inference cycle. As such, there is a need for more such robust technical solutions that disrupt data silos and connect data providers with model developers while providing privacy and security guarantees to individuals, who are the real owners of their data.

With DEPA 2.0, India is already experimenting with a solution inspired by confidential computing, called Confidential Computing Rooms, or CCRs. CCRs are hardware-protected secure computing environments where sensitive data can be accessed in an algorithmically controlled manner for model training.

These algorithms create an environment in which data can be used while ensuring that privacy and security guarantees for citizens are upheld and that data does not change hands. Techniques like differential privacy introduce controlled noise or randomness into the training process to protect individuals’ privacy by making it harder to identify them or extract sensitive information.
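To make the idea of controlled noise concrete, here is a minimal sketch of one common way differential privacy is applied during training: each individual's gradient is clipped to a maximum size, and Gaussian noise is added before averaging. The function names and parameters are illustrative assumptions, not part of DEPA or any CCR specification, and the sketch does not track a formal privacy budget.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient vector down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def noisy_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average.

    Clipping bounds how much any one individual can influence the update;
    the noise (standard deviation = noise_multiplier * max_norm) masks
    whatever influence remains.
    """
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        for i, g in enumerate(clip(grad, max_norm)):
            total[i] += g
    sigma = noise_multiplier * max_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [n / len(per_example_grads) for n in noisy]
```

A larger `noise_multiplier` gives stronger protection at the cost of a noisier, less accurate update; setting it to zero recovers the plain average of the clipped gradients.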

To make CCRs work, model certifications and e-contracts are essential elements. The model sent to a CCR for training has to be certified to ensure it upholds privacy and security guidelines, and e-contracts are required to facilitate authorised and auditable access to datasets. For example, loan providers can authorise model developers to access a representative sample of the datasets residing with them via a CCR for model training. This arrangement will be facilitated via e-contracts once the CCR verifies the validity of the model certification provided by the modeller.
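The sequence described above, where the CCR first verifies the model certification and only then lets the e-contract take effect, can be sketched roughly as follows. All class and field names are hypothetical, and a real certification check would involve cryptographic verification rather than a simple flag.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelCertificate:
    model_id: str
    certified: bool   # hypothetical stand-in for a verified certification


@dataclass(frozen=True)
class EContract:
    data_provider: str   # e.g. a loan provider holding the dataset
    model_id: str        # the model this contract authorises
    dataset: str         # the representative sample being made available


class ConfidentialRoom:
    """Hypothetical CCR gatekeeper that records every access decision."""

    def __init__(self):
        self.audit_log = []

    def authorise_training(self, cert: ModelCertificate, contract: EContract) -> bool:
        # Training proceeds only if the model is certified and the e-contract
        # names that same model; the decision is logged either way.
        ok = cert.certified and cert.model_id == contract.model_id
        self.audit_log.append(
            (contract.data_provider, contract.dataset, cert.model_id, ok)
        )
        return ok
```

Keeping the decision and the log in one place reflects the requirement that access be both authorised and auditable.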

India’s significant progress with technical measures that are aligned with domestic legal frameworks provides it with a head start in the AI innovation landscape. Countries across the globe are struggling to find solutions that facilitate personal data sharing for model development while prioritising security and privacy. Multiple lawsuits have recently been filed against OpenAI across numerous jurisdictions for unlawfully using personal data to train its models.

India’s unique approach to data governance, where technical and legal frameworks fit together like pieces of a puzzle and walk the fine line between promoting AI innovation and providing privacy guarantees, is well-positioned to guide global approaches to data governance.

In a quiet and disciplined fashion, over the last six years, India has put the critical techno-legal pieces in place for becoming a significant AI player in the world alongside the US and China. Like them, we have continental-scale data and the talent to shape our future. With the passage of the DPDP Bill, we are now one step closer to having modern regulatory tools for effective regulation of AI and regulation for AI.

Co-Authored by Antara Vats and Sharad Sharma

A version of this was published on Financial Express, August 9th, 2023.

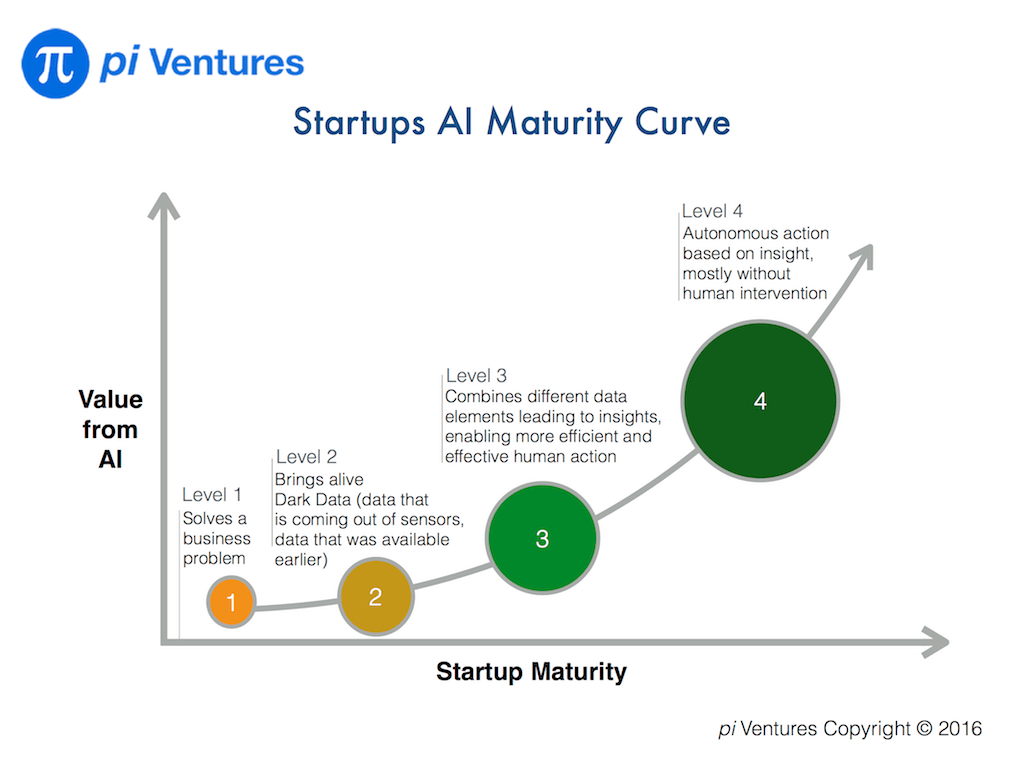
