In the last decade, we’ve seen an extraordinary explosion in the volume of data that we, as a species, generate. The possibilities that this data-driven era unlocks are mind-boggling. Large language models, trained on vast datasets, are already capable of performing a wide array of tasks, from text completion to image generation and understanding. The potential applications of AI, especially for societal problems, are limitless. However, lurking in the shadows are significant concerns such as security and privacy, abuse and misinformation, and fairness and bias.

These concerns have led to stringent data protection laws worldwide, such as the European Union’s General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA), and the European AI Act. India has recently joined this global privacy protection movement with the Digital Personal Data Protection Act of 2023 (DPDP Act). These laws emphasize the importance of individuals’ right to privacy and the need for real-time, granular, and specific consent when sharing personal data.

In parallel with privacy laws, India has also adopted a techno-legal approach for data sharing, led by the Data Empowerment and Protection Architecture (DEPA). This new-age digital infrastructure introduces a streamlined and compliant approach to consent-driven data sharing.

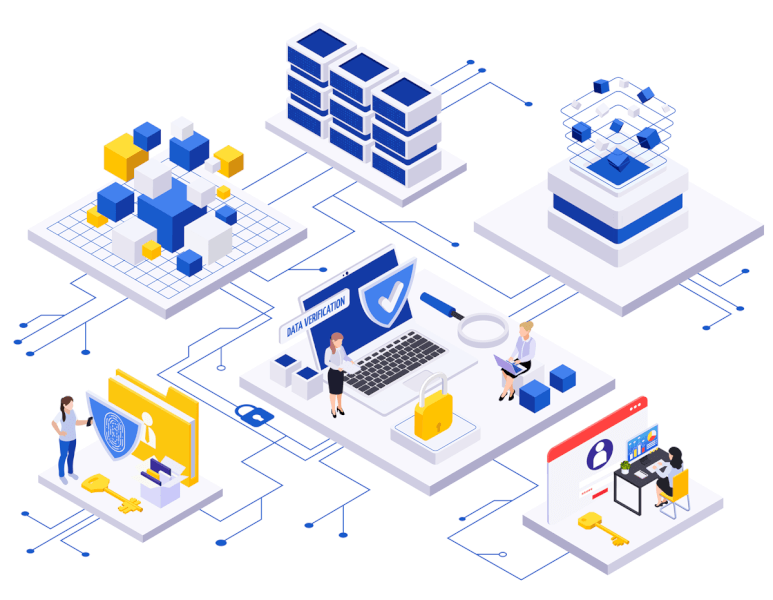

Today, we are taking the next step in this journey by extending DEPA to support training of AI models in accordance with responsible AI principles. This new digital public infrastructure, which we call DEPA for Training, is designed to address critical scenarios such as detecting fraud using datasets from multiple banks and helping with the tracking and diagnosis of diseases, all without compromising the privacy of data principals.

DEPA for Training is founded on three core concepts: digital contracts, confidential clean rooms, and differential privacy. Digital contracts backed by transparent contract services make it simpler for organizations to share datasets and collaborate by recording data sharing agreements transparently. Confidential clean rooms ensure data security and privacy by processing datasets and training models in hardware-protected secure environments. Differential privacy further fortifies this approach, allowing AI models to learn from data without risking individuals’ privacy. You can find more details on how these concepts come together to create an open and fair ecosystem at https://depa.world.
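To make the differential privacy idea a little more concrete, here is a minimal sketch of one common way to train with it: clip each example's gradient and add Gaussian noise before averaging (the core step of DP-SGD). This is an illustration only. The post does not say which mechanism DEPA for Training uses, and the function names and parameters (`clip`, `private_mean_gradient`, `max_norm`, `noise_multiplier`) are hypothetical.

```python
import math
import random


def clip(gradient, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in gradient))
    if norm <= max_norm:
        return list(gradient)
    scale = max_norm / norm
    return [g * scale for g in gradient]


def private_mean_gradient(per_example_gradients, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average.

    Assumption: this mirrors the clip-and-noise step of DP-SGD. It is not
    taken from the DEPA for Training implementation.
    """
    n = len(per_example_gradients)
    dim = len(per_example_gradients[0])
    total = [0.0] * dim
    for gradient in per_example_gradients:
        for i, g in enumerate(clip(gradient, max_norm)):
            total[i] += g
    # Noise is scaled to the clipping bound, so no single example can
    # shift the result by more than the noise is designed to mask.
    sigma = noise_multiplier * max_norm
    return [(t + rng.gauss(0.0, sigma)) / n for t in total]


# Usage: two toy per-example gradients, clipped to norm 1.0, with noise.
rng = random.Random(0)
noisy = private_mean_gradient([[3.0, 4.0], [0.3, 0.4]], 1.0, 1.1, rng)
print(noisy)
```

Clipping bounds how much any one individual's record can influence the update, and the noise hides what remains. A larger `noise_multiplier` gives stronger privacy at the cost of accuracy. Turning these settings into a formal privacy guarantee requires a separate privacy accounting step that this sketch omits.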

DEPA for Training represents the first step towards a more responsible and secure AI landscape, where data privacy and technological advancement can thrive side by side. We believe that collaboration and feedback from experts, stakeholders, and the wider community are essential in shaping the future of this approach. Please share your feedback here.

For more information, please visit depa.world.

Please note: This blog post is authored by our volunteer, Kapil Vaswani.